BASIC INFORMATION

This is the notes of the course “Provable Security”. The first few courses will be taught online. While subsequent courses are still unsettled.

I took this course last year, and I am pretty confident about my grasp of basic concepts like universal hash function, GL theorem, basic PRG, PRF constructions, etc. But contents like CRHF and PRP are still rather alien to me, so in this course I will review the previous contents and try to master the missing pieces. Also it is desireable to incopreate the previous notes and complete the notes altogegher.

| TEACHER | 郁昱,刘振 |

|---|---|

| LOCATION | 陈瑞球楼108 |

| CODE | C033728200203300M01 |

| ZOOM NUMBER | 284739677 |

| ZOOM CODE | 09333040 |

| LINK | https://zoom.com.cn/j/284739677 |

Homework

This section is dedicated to all homework and exercises throughout the lecture (along with their solutions of course).

18-19-2 Lecture 2

- Prove conditional LHL

- Non-existence of deterministic randomness extractor

- one-time message authentication code

- equivalence between min-entropy and collision entropy

For solutions please refer to Here.

18-19-2 Lecture 4

- Improving advantage in GL-theorem proof,i.e., difference between guessing game (exact preimage) and inverting game (any satisfying preimage).

- Exercies 6.1 6.2 6.4 6.6 from KL book

Answer can be found here.

19-20-2 Lecture 2

Existence of independent code here.

For an event \(E\), we use the indicator function

\[ I(E) = \begin{cases} 1 & E\\ 0 & \bar{E} \end{cases}\enspace. \]

Fix an \(i\), we bound the probability

\[ p = \Pr_{C}[\Pr_{r\leftarrow R_i^m}[Cr = 0] > \frac{1+\zeta}{2^n}]. \]

First we expand the inner probability expression

\[ p = \Pr_C[\frac{1}{\binom{m}{i}} (\sum_{r^{\prime}: |r^{\prime}| = i}I(Cr^{\prime} = 0)) > \frac{1+\zeta}{2^n}]. \]

And then we transform the form to facilitate Chebyshev’s Inequality. (It is easy to observe that \(\mathsf{E}_{C}[I(Cr=0)] = \frac{1}{2^n}\).)

\begin{align*} p &= \Pr_C[(\sum_{r^{\prime}: |r^{\prime}| = i}I(Cr^{\prime} = 0)) - \frac{\binom{m}{i}}{2^n} > \binom{m}{i} \frac{\zeta}{2^n}] \\ &\le \Pr_C[\left| (\sum_{r^{\prime}: |r^{\prime}| = i}I(Cr^{\prime} = 0)) - \frac{\binom{m}{i}}{2^n} \right| > \binom{m}{i} \frac{\zeta}{2^n}] \\ &\le \frac{\underset{C}{Var}{(\sum_{r^{\prime}: |r^{\prime}| = i}I(Cr^{\prime} = 0))}} {(\binom{m}{i} {\frac{\zeta}{2^n}})^2}\enspace. \end{align*}

Notice that for distinct \(r_1\) and \(r_2\), the random variable \(I(C r_1 = 0\) and \(I(C r_2 = 0\) are independent since \(I(C (r_1 - r_2)\) follows the same distribution as either of them. This means we can further expand the expression as

\[ p \le \frac{ \sum_{r^{\prime}: |r^{\prime}| = i}\underset{C}{Var}{(I(Cr^{\prime} = 0))}}{(\binom{m}{i} {\frac{\zeta}{2^n}})^2}\enspace. \]

Since once \(r\) is fixed, \(I(Cr = 0)\) follows the Bernoulli distribution with \(\mu = \frac{1}{2^n}\), we can further write the expression as

\begin{align*}~ p &\le \frac{ \binom{m}{i} \mu - \mu^2}{(\binom{m}{i} {\frac{\zeta}{2^n}})^2} \\ &\le \frac{2^n \binom{m}{i}^{-1}}{\zeta^2}\enspace. \end{align*}

Since \(i \le \frac{k}{2}\) and \(\binom{m}{i} \ge \binom{m}{k/2} \ge (\frac{m}{k/2})^{k/2}\), we have

\begin{align*} p &\le \frac{2^{n - \frac{k}{2} \log{\frac{2m}{k}}}}{\zeta^2} \\ &\le \frac{2^{n - \frac{k}{2} \log{\frac{m}{k}} + \frac{\log m}{2}}} {\zeta^2}\enspace. \end{align*}

Using a union bound on all possible values of \(i\), we conclude the proof of this lemma.

19-20-2 Lecture 5

PRG from LPN

Link: Lecture 5

Following usual LPN conventions, we define the LPN distribution \(LPN_{n,\mu}^m := (A, As+e)\), where \(A\leftarrow U_{m\times n}\), \(s\leftarrow U_n\), and \(e \leftarrow Ber_{\mu}^m\). The (\(n\), \(\mu\), \(m\))-DLPN assumption postulate that for any probablistic polynomial time distinguisher, the advantage of distinguishing \(LPN_{n,\mu}^m\) apart from uniform distribution is at most \(\epsilon = \mathsf{negl}\). We now construct a PRG from (\(n\), \(1/16\), \(m\))-DLPN assumption.

The construction is very simple. On \(4m + n\) bit input, the algorithm \(g\) produces \(4.2m\) bits of output from the following procedure:

- Parse the \(4m\) bits of input (denoted as \(r\)) as \(m\) 4-bit blocks, and produce noise \(e\) by taking the logical AND of each block.

- Produce first \(m\) bit by \(y_1 = A\cdot s + e\) where \(s\) is the remaining \(n\) bits of input.

- Produce the rest \(3.2m\) bits by using the universal hash function \(h\).

Note that the matrix \(A\) and hash function \(f\) are both public randomness, and can be considered as part of both input and output. We prove the pseudorandomness of output below.

Proof (sketch). We consider the more general case of generating \(\mu = 2^t\) Bernoulli noise from \(mt\) random bits. The aforementioned sampling algorithm actually wasted a lot of randomness, which can actually be recycled to facilitate a positive stretch.

Claim. The min-entropy of \(r\) conditioned on \(e\) is at least \(mt(1 - 2^{-\Omega{t}})\) except with probability \(e^{-m2^{-t}/3}\).

This can be shown from the following argument. By Chernoff bound, the probability of \(e\) having more than \(2^{-t+1}m\) ones is at most \(2^{-m 2^{-t}/3}\). Thus, conditioned on this event, at least \(m(1-2^{-t+1})\log(2^t-1)\) bits of \(r\) are unpredictable. Using the fact that \(\log(2^t-1) > \log(2^{t(1 - 2\Omega(t))})\) we can conclude that the conditional min-entropy of \(r\) in this case is at least \(mt(1 - 2^{-\Omega{t}})\).

The rest of the proof follows by a standard hybrid argument. In particular,

- Hybrid 1

- The algorithm \(g\) aborts whenever \(|e| > 2^{-t+1}m\). From the Chernoff bound, the output in this case is statistically close to the real distribution.

- Hybrid 2

- Replace hashed output \(h( r)\) with uniform randomness. The output is indistinguishable from Hybrid 1 from Leftover Hash Lemma.

- Hybrid 3

- Replace the first part of output by uniform randomness. The output is indistinguishable from Hybrid 2 from Decisional LPN assumption under constant noise rate.

- Hybrid 4

- Remove artifitial abort introduced in Hybrid 1. Once again, this is statistically indistinguishable from Hybrid 2. This is also the uniform distribution.

The constants in the aformentioned construction are chosen so that \( m (1 - 2^{-t+1}) \log(2^t-1) > m(t-1) + d\) where \(d = \omega(\log n)\) is an appropriate entropy loss.

CLPN and DLPN Equivalence

Link: Exercises Proof is in the link.

19-20-2 Lecture 6

Levin’s Trick: Exercise: Domain Extension for PRFs Proof is in the link.

18-19-2 Lecture 2

In this note, the content of this week’s lecture on provable security by Prof. Yu is summarized, from the handout and my own note taken at the lecture. Additionally, I will give my answers to the homework given at the end of this week’s handout.

Lecture Content

Several key concepts were introduced in this week’s lecture along with their definitions. These includes \(\varepsilon\) -security of private key encryption schemes, statistical distance, minimum entropy and unpredictability, randomness extractor, and leftover hash lemma. The reader might be already familiar with these concepts, and if that is the case, they should agree with my opinion that the theory of probability are heavily used in those definition and results.

Anyway, the following is derived from my notes taken during the lecture.

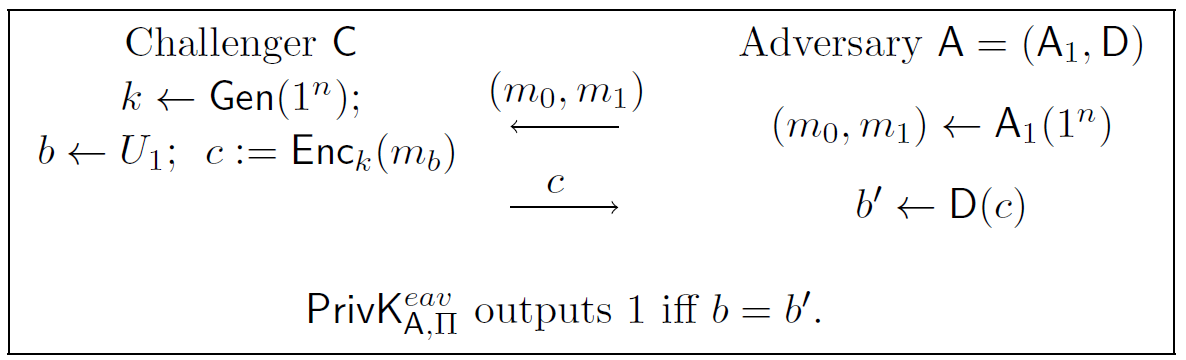

Indistinguishability

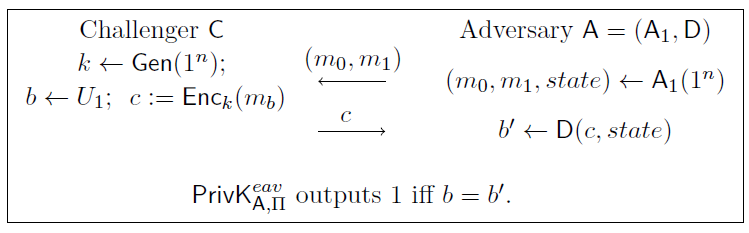

Indistinguishability and resilience to key recovery attack are the two primary means to defining the security of a encryption scheme. We define the following indistinguishability experiment:

There are several points to note here:

- Unlike the indistinguishability experiment in the public key

encryption scheme, the adversarial algorithm \(A=(A_1,D)\) here does not output a state. This is because the adversary’s power is unlimited, and therefore (in my opinion) the decryption algorithm can run the message generation algorithm again to get all the state information it needs, ergo the state need not to be passed.

- The advantage of the adversary here is defined as the probability

\(\Pr[\mathsf{PrivK}^{eav}_{\mathsf{A,\mathcal{P}i}}] - 1/2\).

Statistical Security

There are two ways to define statistical security (i.e. \(\varepsilon\) -secure), and they are equivalent. Note that in the indistinguishability experiment version of the definition, the probability \[ \Pr[\mathsf{PrivK}^{eav}_{\mathsf{A,\mathcal{P}i}}] < 1/2 + \varepsilon/2 \] implies \[ 1/2 - \varepsilon/2 < \Pr[\mathsf{PrivK}^{eav}_{\mathsf{A,\mathcal{P}i}}] < 1/2 + \varepsilon/2.\] This is because if the upper bound of the success probability is bounded, then the lower bound must follow the same margin. If not, negate the distinguisher with very low success probability will get an adversary that breaks the upper bound.

When an encryption scheme achieves \(0\) -security, we say that it is perfectly secure. Vernam’s Cipher (One-time pad) is such a cipher.

Statistical Distance (SD)

The definition of statistical distance is as follows: \[ \mathsf{SD}(X,Y) \overset{\text{def}}{=} 1/2 \sum_x{|\Pr[X=x]-\Pr[Y=x]|}, \]

and we say \(X\) is \(\varepsilon\) -close to \(Y\) if \(\mathsf{SD}(X,Y) < \varepsilon\).

There is also a lemma about the advantage limit of any distinguisher on two distributions with limited statistical distance. For random variables \(X\) and \(Y\) defined over set \(\mathcal{S}\), and for any distinguisher \(\mathsf{D} : \mathcal{S} \rightarrow \{0,1\}\), we have

\[ \left| \Pr[\mathsf{D}(X)=1]-\Pr[\mathsf{D}(Y)=1] \right| \leq \mathsf{SD}(X,Y). \] The proof is actually not hard, since the distinguisher \(\mathsf{D}\) is all-powerful, we can think of it as deterministic, and therefore (w.l.o.g. we assume \(\Pr[\mathsf{D}(X)=1]\geq\Pr[\mathsf{D}(Y)=1]\))

\begin{align*} |\Pr[\mathsf{D}(X)=1]-\Pr[\mathsf{D}(Y)=1]| &= |\sum_{x\in S:\mathsf{D}(x)=1}{\Pr[X=x]-\Pr[Y=x]} |\\ &\leq |\sum_{x\in S:\mathsf{D}(x)=1 \cap S:\Pr[X=x]\geq\Pr[Y=x]} {\Pr[X=x]-\Pr[Y=x]}|\\ &\leq |\sum_{x\in S:\Pr[X=x]\geq\Pr[Y=x]}{\Pr[X=x]-\Pr[Y=x]} |\\ &=\mathsf{SD}(X,Y)\enspace. \end{align*}

The two inequality holds if and only if the two sets are equal. (The last equality is a little tricky.)

The statistical distance \(\mathsf{SD}\) is a metric, meaning the following properties holds:

- non-negativity

- identity of indiscernibles

- symmetry

- triangle inequality.

Statistical distance also has the following additional properties:

- no greater than 1 (the equality holds if and only if the elements with positive probability in the two distributions does not intersect.)

- replacement: for every function \(f\), it holds that \(\mathsf{SD}(f(X),f(Y)) \leq \mathsf{SD}(X,Y)\). (the equality holds if and only if \(f\) is a bijective map.)

Statistical Security of OTP

Replacing the key-generation algorithm \(\mathsf{Gen}\) in OTP by an algorithm \(\mathsf{Gen}^{\prime}\) that draws key according to some distribution \(\tilde{K}\) that is \(\varepsilon\) -close to \(U_n\) will gives us an encryption scheme that is \(2\varepsilon\) -secure.

The proof is as follows, fix message \(m_0, m_1 \in \{0,1\}^n\), \(m_0 \neq m_1\). We have for any distinguisher \(\mathsf{D}\),

\begin{align*} |\Pr[\mathsf{D}(m_0 \oplus \tilde{K})=1] - \Pr[\mathsf{D}(m_1 \oplus \tilde{K})=1]| &\leq \mathsf{SD}(m_0 \oplus \tilde{K}, m_1 \oplus \tilde{K})\\ &\leq \mathsf{SD}(m_0 \oplus \tilde{K},m_0 \oplus U_n) + \mathsf{SD}(m_1 \oplus \tilde{K},m_0 \oplus U_n)\\ &\leq \mathsf{SD}(\tilde{K}, U_n) + \mathsf{SD}(\tilde{K}, U_n)\\ &= 2\varepsilon\enspace. \end{align*}

Unpredictability and Min-Entropy

Definition for unpredictability is as follows, a random variable \(X\) is \(\varepsilon\) -unpredictable if for any algorithm \(A\), we have \(\Pr[A(1^n) = X] \leq \varepsilon\).

Min-entropy is defined as \[ \mathbf{H}_{\infty}(X)\overset{\text{def}}{=} -\log(\max_{x\in \mathcal{X}}\Pr[X=x]).\]

There are also average min-entropy and conditional unpredictability definitions. For joint random variable \((X,Z)\), we say that \(X\) is \(\varepsilon\) -unpredictable given \(Z\) if for every algorithm \(A\) it holds that \(\Pr[A(1^n,Z)=X]\leq \varepsilon\). While the average min-entropy of \(X\) conditioned on \(Z\), denoted by \(\mathbf{H}_{\infty}(X|Z)\), is defined by \[ \mathbf{H}_{\infty}(X|Z) \overset{\text{def}}{=} \mathop{\mathbb{E}}_{z \leftarrow Z}(\max_{x\in \mathcal{X}} \Pr[X=x|Z=z]). \]

It holds that a random variable \(X\) is \(\varepsilon\) -unpredictable if and only if its min-entropy \(\mathbf{H}_{\infty}(X) \geq \log(1/\varepsilon)\).

Randomness Extractor

This part is not covered in the handout, as Prof. Yu added them to his slides that he personally said “specially prepared since so many students showed up in his class”. Therefore, I would not be able to get more detailed content apart from my notes.

First observe that a key with high min-entropy does not guarantee security. To see this, observe that if there is a distribution that always output \(0\) on the first bit, followed by \(n-1\) uniformly random bits. Now, if we use this distribution as the key distribution in place of the uniform distribution in OTP, and test it in the indistinguishability experiment, the adversary will always win, ergo the scheme is completely insecure. We want to have a randomness extractor, that given input with some min-entropy, gives output that is has some small statistical distance to the uniform distribution. That is, for a (\(n\), \(k\), \(m\), \(\varepsilon\))-randomness extractor, if its input is \(n\) -bit long and has min-entropy of \(k\), the output will be \(\varepsilon\) -close to \(U_m\). However, even for \(m=1\), \(k=n-1\), such a deterministic extractor does not exist. In order to see this, for any deterministic extractors, we can get the set \(S_0:\mathsf{Ext}(x) = 0\) and \(S_1:\mathsf{Ext}(x) = 1\), and w.l.o.g. assume \(|S_0|\geq|S_1|\). Then consider the distribution on \(\{0,1\}^{n}\) that has probability \(1/|S_0|\) when \(x\in S_0\) and \(0\) otherwise. The output of the extractor \(\mathsf{Ext}\) will have \(1/2\) statistical distance from \(U_1\).

And therefore to achieve our goal of randomness extractor, additional modification must be added. The approach introduced is randomness extractor with a seed (i.e. universal hash functions).

Universal Hash Function and Leftover Hash Lemma

The definition of universal hash function is as follows. \(\mathcal{H} \subseteq \{0,1\}^l\rightarrow\{0,1\}^t\) is a family of universal hash function if for any distinct \(x_1,x_2\in \{0,1\}^l\), it holds that

\[ \Pr_{h\overset{\$}{\leftarrow}\mathcal{H}}[h(x_1)=h(x_2)] \leq 2^{-t}.\]

For example, \(\mathcal{H}=\{h_a:h_a(x)\overset{\text{def}}{=}(a \cdot x)_{[t]}\}\) is a family of universal hash functions, and \(|\mathcal{H}|=2^{l}\).

The leftover hash lemma states that universal hash functions are good randomness extractors.

For any integers \(d \leq k \leq l\), let \(\mathcal{H} \subseteq \{0,1\}^l\rightarrow\{0,1\}^{k-d}\) be a family of universal hash functions. Then, for any random variables \(X\) defined over \(\{0,1\}^l\) with min-entropy no less than \(k\), it holds that

\[\mathsf{SD}(H(X),U_{k-d}|H) \leq 2^{-d/2-1},\]

where \(H\) is the random variable that is uniformly distributed over all members of \(\mathcal{H}\).

Prof. Yu skipped the proof at the lecture, but I think he will catch up with that in the next lecture. Nevertheless, I have read the proof and proved corollary 3.1 which is a conditional version of leftover hash lemma, and also the first homework. The key to the proof is to use a Cauchy-Schwartz inequality to create a quadratic term, which also introduces a square root.

One application of the leftover hash lemma is the privacy amplification. Prof. Yu also introduced another concept called non-malleable extractor, which is like \(\forall A,\forall s, A(s) \neq s\), and for \(X\) with min-entropy \(k\), we have

\[ (\mathsf{Ext}(X,U_d), \mathsf{Ext}(X,A(U_d)),U_d) \overset{\varepsilon}{\approx}(U_m, \mathsf{Ext}(X,A(U_d)),U_d). \]

Honestly, I have not grasped the idea of this definition until now. Maybe I will consult with others later.

Homework

The following is my solutions to the four homework problems in handout2

Prove Corollary 3.1 (Conditional Leftover Hash Lemma)

Suppose for integers \(d\leq k \le l\) and \(\mathcal{H}:\{0,1\}^{l}\to\{0,1\}^{k-d}\) be the same as assumed in leftover hash lemma (a universal hash function family). For any random variable \((X,Z)\) where \(X\) is over \(\{0,1\}^l\) with average min-entropy \(\mathbf{H}_\infty(X|Z) \geq k\) it holds that \[ \mathsf{SD}(H(X),U_{k-d}|H,Z)\le 2^{-d/2-1}\] where \(H\) is the random variable that is uniformly distributed over all members of \(\mathcal{H}\).

The proof is as follows. By \(\mathbf{H}_\infty(X|Z) \geq k\), we have that \[ \mathop{\mathbb{E}}_{z \leftarrow Z}(\max_{x\in \mathcal{X}} \Pr[X=x|Z=z]) \le 2^{-k}.\] Now lets analyze the statistical distance (let \(S=\{0,1\}^{k-d}\)),

\begin{align*} \mathsf{SD}&(H(X),U_{k-d}|H,Z) = \mathsf{SD}((H(X),H,Z),(U_{k-d},H,Z))\\ &=1/2\cdot\sum_{h\in\mathcal{H},z\in Z,s\in S}|\Pr[H(X)=s\land H=h\land Z=z]-\Pr[U_{k-d}=s\land H=h\land Z=z]|\\ &=1/2\cdot\sum_{h\in\mathcal{H},z\in Z,s\in S}|1/|\mathcal{H}|\cdot(\Pr[h(X)=s|Z=z]\cdot\Pr[Z=z]-1/|S|\cdot\Pr[Z=z])|\\ &=1/2\cdot\sum_{h\in\mathcal{H},s\in S}|\frac{1}{\sqrt{|\mathcal{H}||S|}}|\cdot|\sum_{z\in Z}(\frac{\sqrt{|S|}}{\sqrt{|\mathcal{H}|}}\cdot(\Pr[h(X)=s\land Z=z]-1/|S|\cdot\Pr[Z=z]))|\\ &\leq1/2\cdot\left( \sum_{h\in\mathcal{H},s\in S}(\sum_{z\in Z}\Pr[Z=z]^2(\frac{|S|}{|\mathcal{H}|}\Pr[h(X)=s|Z=z]^2-\frac{2\Pr[h(X)=s|Z=z]}{|\mathcal{H}|}+\frac{1}{|S||\mathcal{H}|})) \right)^{1/2}\\ &=1/2\cdot\left(\sum_{z\in Z}\Pr[Z=z]^2[(\sum_{h\in\mathcal{H},s\in S}\frac{|S|}{|\mathcal{H}|}\Pr[h(X)=s|Z=z]^2)-1]\right)^{1/2}\\ &\leq 1/2\cdot\left(\sum_{z\in Z}\Pr[Z=z]^2\cdot\max_{x\in X}\Pr[X=x|Z=z]\cdot|S|\right)^{1/2}\\ &\leq 2^{-d/2-1}. \end{align*}

The final inequality relies on the fact that \(\Pr[Z=z]^2\leq\Pr[Z=z]\).

Non-Existence of Deterministic Randomness Extractor

For any deterministic function \(h:\{0,1\}^n\to\{0,1\}\), define the sets \(S_0:=\{x|h(x)=0\}\) and \(S_1:=\{x|h(x)=1\}\). Then there must exist a set \(S_b\) such that \(|S_b|\geq2^{n-1}\). Construct such a distribution that has probability \(1/|S_b|\) for any element in \(S_b\) and \(0\) for \(S_{1-b}\). The distribution has min-entropy at least \(n-1\). But applying such input to \(h\) would get result that has \(1/2\) statistical distance to \(U_1\).

One-Time Message Authentication Code

We only need to compute the probability that the adversary succeeds. Consider that such event happens would indicate \(w_2\cdot(m-m^\prime)=\sigma-\sigma^\prime\), and therefore the adversary can completely compute \(W_2\). And completely succeeding in getting \(W_2\) would indicate such an attack is successful. This gives us the crude equivalence of the two events. The probability of successfully guessing \(W_2\), conditioned on \(Z\) is at most \(2^n \cdot 2^{-n-t}\), completing the proof.

Equivalence Between Min-Entropy and Collision Entropy

For random variable \(X\), define the collision probability

\[ \mathsf{CP}(X)\overset{\text{def}}{=}\sum_x{\Pr[X=x]^2}\enspace, \]

and collision entropy

\[ \mathbf{H}_2(X)=-\log(\mathsf{CP}(X))\enspace.\]

Show that for any \(X\) with \(\mathbf{H}_2(X)\geq k\) and any \(0<\delta<1\) there exists some \(Y\) with \(\mathbf{H}_\infty(Y)\ge k-\log(1/\delta)\) such that \(\mathsf{SD}(X,Y)\leq \delta\).

First observe that \(\sum_x{\Pr[X=x]^2}\le 2^{-k}\) implies \(\mathbf{H}_0(X)\ge k\), which implies \(|\mathcal{X}|\ge 2^k\) (the sample space). Then define the set \(S:=\{x|\Pr[X=x]\le 1/\delta \cdot 2^{-k}\}\). Define the distribution \(Y\) such that for every \(x\in \mathcal{X}\setminus S\), \(\Pr[Y=x]=1/\delta \cdot 2^{-k}\). And distribute the difference between \(X\) in to the values \(x\in S\) on top of \(\Pr[X=x]\) while keeping \(\Pr[Y=x]\le 1/\delta\cdot 2^{-k}\). This is possible since \(|\mathcal{X}|\ge 2^k\). The resulting distribution will have statistical distance less than \(\delta\).

18-19-2 Lecture 3

In this note, the content of this week’s lecture on provable security by Prof. Yu is summarized, from the handout and my own note taken at the lecture. Additionally, I will try to prove the equivalence of semantic security and indistinguishability here.

Lecture Content

As some important proofs were skipped in the last lecture, the lecture started by explaining the proof of the leftover hash lemma in the second handout. The rest of the lecture continued on the computational approach to modern cryptography (computational complexity based approach), and introduced very important concepts like pseudorandom generator, replacement lemma, and hybrid argument. The lecture ended right after hybrid argument was introduced and explained in detail.

Proof of Leftover Hash Lemma

Recall that the leftover hash lemma states that for any integers \(d \leq k \leq l\), let \(\mathcal{H} \subseteq \{0,1\}^l\rightarrow\{0,1\}^{k-d}\) be a family of universal hash functions. Then, for any random variables \(X\) defined over \(\{0,1\}^l\) with min-entropy no less than \(k\), it holds that \[ \mathsf{SD}(H(X),U_{k-d}|H) \leq 2^{-d/2-1},\] where \(H\) is the random variable that is uniformly distributed over all members of \(\mathcal{H}\).

Informally, the leftover hash lemma states that universal hash function is a \((l,k,k-d,2^{-d/2-1})\) -randomness extractor. The proof uses Cauchy-Schwartz inequality, which is quite common in the reductions on lattice, according to Wenling, since the equality can be achieved (whey the two vectors have the same direction), and can be easily extended to the complex number field. In my opinion, the core part of the proof is to analyze the collision probability. The proof is as follows (for convenience I denote the set \(\{0,1\}^{k-d}\) by \(S\)):

\begin{align*} \mathsf{SD}&(H(X),U_{k-d}|H) = \mathsf{SD}((H(X),H),(U_{k-d},H))\\ &=1/2\sum_{s\in\{0,1\}^{k-d},h\in\mathcal{H}}|\Pr[H(X)=s\land H=h]- \Pr[U_{k-d}=s\land H=h]|\\ &=1/2\sum_{h\in\mathcal{H}}1/|\mathcal{H}|\cdot\sum_{s\in S} | \Pr[H(X)=s|H=h]-1/|S||\\ &=1/2\sum_{s\in\{0,1\}^{k-d},h\in\mathcal{H}} \frac{1}{\sqrt{|S||\mathcal{H}|}} \cdot|\frac{\sqrt{|S|}}{\sqrt{|\mathcal{H}|}} (\Pr[H(X)=s|H=h]-1/|S|)|\\ &=1/2(\sum_{s\in\{0,1\}^{k-d},h\in\mathcal{H}} \frac{|S|}{|\mathcal{H}|} (\Pr[H(X)=s|H=h]^2-2\Pr[H(X)=s|H=h]/|S|+1/|S|^2))^{1/2}\\ &=1/2((\sum_{s\in\{0,1\}^{k-d},h\in\mathcal{H}} \frac{|S|}{|\mathcal{H}|} \Pr[H(X)=s|H=h]^2)-1)^{1/2}\\ &=1/2(|S|(\sum_{h\in\mathcal{H}} \frac{1}{|\mathcal{H}|}\sum_{s\in S} \Pr[H(X)=s|H=h]^2)-1)^{1/2} \end{align*}

In this step, we should consider the meaning of the quadratic probability term. It essentially means the expectation of the probability of two identically independent variables sampled according to distribution \(X\), after applied to \(h\in\mathcal{H}\), collides. The expectation is over the uniformly random choice of \(h\). According to the fact that \(\mathcal{H}\) is a family of universal hash functions, we can split the probability into two cases.

- Case 1: \(X_1=X_2\) (we denote the two random variables by \(X_1\) and

\(X_2\)). In this case, the collision will always happen.

- Case 2: \(X_1 \neq X_2\). In this case, by the property of universal

hash function, for any \(x_1\neq x_2\), the probability of \(h(x_1)=h(x_2)\) when applying a uniformly random \(h\leftarrow\mathcal{H}\) is less than \(2^{k-d}\).

For convenience, denote \(\mathbf{Collide}\) the event that such collision happens (the sample space is \(S\times\mathcal{H}\)), we have

\begin{align*} \Pr[\mathbf{Collide}] &= \Pr[X_1= X_2]\cdot \Pr[\mathbf{Collide}|X_1=X_2]+\Pr[X_1\neq X_2]\cdot \Pr[\mathbf{Collide}|X_1\neq X_2]\\ &\leq \Pr[X_1=X_2]+\Pr[\mathbf{Collide}|X_1\neq X_2]\\ &\leq \sum_{s\in S}\Pr[X_1=s]^2+2^{^{-k+d}}\\ &\leq \max_{s\in S}\Pr[X=s] + 2^{^{-k+d}}\\ &\leq 2^{-k}+2^{-k+d}\enspace. \end{align*}

Putting this into the previous equation, we have

\begin{align*} \mathsf{SD}(H(X),U_{k-d}|H) &\leq 1/2 (|S|(\sum_{h\in\mathcal{H}}\frac{1}{|\mathcal{H}|} \sum_{s\in S}\Pr[H(X)=s|H=h]^2)-1)^{1/2}\\ &=1/2(2^{k-d}(2^{-k}+2^{-k+d})-1)^{1/2}\\ &=2^{-d/2-1}\enspace. \end{align*}

And that completes the proof.

Other Points that Arise in the Proof

The above was exactly as explained in the lecture, except for one detail. I think the probability \(\Pr[X_1=X_2]\) can be enlarged pretty easily by writing it in the quadratic form and enlarging every term to a probability times the maximumly possible probability, and nothing is wrong with this notion. However, in the lecture, a different approach is used. In particular, Prof. Yu first introduced a lemma concerning random variables. The lemma states that Any X of min-entropy k can be represented as a convex combination of flat distributions over sets of size \(2^k\). The notion written on the blackboard that day was \[ X = p_1X_1+p_2X_2+\ldots+p_mX_m.\] In the above equation, we have \(\sum_{i\in[m]}p_i=1\), ergo convex combination. But the idea was that with probability \(p_i\), \(X\) will take value from random variable \(X_i\). Hanlin explained to me that this can be treated as breaking the large probability into smaller ones, and placing them in different distributions. When thinking in this way, the collision probability can be easily understood. In particular, if all the \(m\) subsets are the same, the collision probability is exactly \(2^{-k}\). However, if there is any event in the set that takes probability less than \(2^{-k}\), the total collision probability will be less than \(2^{-k}\). This can easily be obtained by observing \((p_1+p_2)^2 \geq p_1^2 +p_2^2\). According to Hanlin, the convex combination approach is simply a more formal way of stating this fact.

There is another way of deriving this fact. There is a uniform way of defining entropy, called Renyi entropy, defined as \[ \text{H}_\alpha(X)=\frac{1}{1-\alpha}\log\left(\sum_{i\in[n]}p_i^\alpha\right).\] When \(\alpha=0\), we get the max-entropy, which is the logarithmic of the size of sample space; when \(\alpha \to 1\), we get Shannon Entropy (I have not proved it myself); when \(\alpha=2\), we get collision entropy, which is exactly what we need in the previous example; and when \(\alpha \to \infty\), we get min-entropy. More importantly, for any discrete random variable \(X\) with finite sample space (I added the constraint myself, since currently I know under such conditions the fact holds), we have the following relations \[ \text{H}_0(X)\ge\text{H}_1(X)\ge\text{H}_2(X)\ge\ldots\ge\text{H}_\infty(X). \] The equality holds when \(X\) is uniformly random. From this fact we can easily derive that in the proof of leftover hash lemma, we have \[ \Pr[X_1=X_2]=2^{-\text{H}_2(X)}\le 2^{-\text{H}_\infty(X)}=2^{-k}. \] And that is the second way to derive the probability, which seems more natural. In fact, the requirement on min-entropy in the lemma can be relaxed to requiring the random variable \(X\) have collision entropy at least \(k\).

Privacy Amplification: an Application of Leftover Hash Lemma

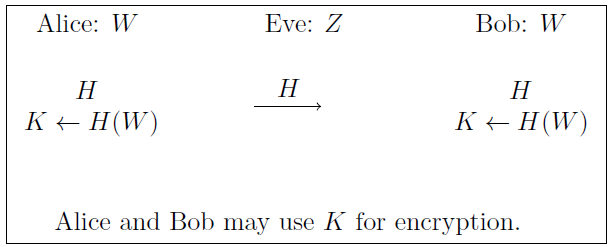

The problem setting of privacy amplification is that when the communicating parties share a secret \(W\), which has some information leaked to an eavesdropping adversary, which we denote by \(Z\). By a corollary of the leftover hash lemma, when \(\text{H}_\infty(W|Z)\geq k\), applying a universal hash function \(\mathcal{H}:\{0,1\}^l\to\{0,1\}^{k-d}\) will get output that is \(2^{-d/2-1}\) -close to uniform random distribution \(U_{k-d}\), conditioned on \(W\) and \(H\). And that can be used as good source of randomness (at least it can be used as key in Vernam’s cipher to get statistically-secure encryption). The process is illustrated in the figure below.

Figure 2: Privacy Amplification

However, this scheme is only secure against an eavesdropping only adversary. An active adversary may temper with the message sent between parties, and easily make the two parties have inconsistent results. Prof. Yu then mentioned that using message authentication code, parties can detect message tempering. And that is also is the third problem of the second lecture’s problem set.

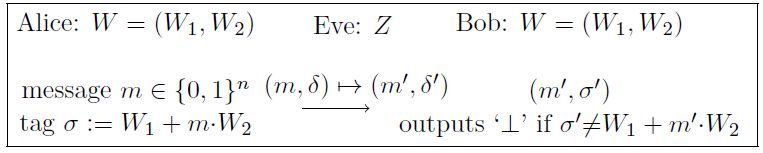

One-Time Message Authentication Code

By adding message authentication code (MAC), the receiving party can effectively detect tempering during the transmission with high probability. The third problem of the problem set in the previous lecture proposes a MAC scheme when the two parties share a secret and only use it once (ergo one-time). The scheme works as follows:

Figure 3: One-time MAC (IT-MAC)

The two parties of communication share a \(2n\) bit secret, denoted by \(W=(W_1,W_2)\), of which some information \(Z\) is leaked to an adversary. The average min-entropy of \(W\) conditioned on \(Z\) is at least \(n+t\), namely, \(\text{H}_\infty(W|Z) \ge n+t\). It can be proved that in this scheme, for any message \(m\), the adversary’s success probability

\[ \Pr_{(w_1,w_2)\gets W,z\gets Z}[m^\prime=A(m,\sigma,z):m\ne m^\prime\land\sigma^\prime=w_1+m^\prime\cdot w_2]\le 2^{-t}. \]

However, as Prof. Yu mentioned in his lecture, the min-entropy of the shared secret is at least \(n+t\) bits, while the constructed scheme can only guarantee at least \(t\) bit of security (I do not know for sure such notion is correct, but from the $ε$-secure notion in the previous lecture I am confident about that). In other words, there is a \(n\) bit entropy loss. He then stated by using pseudorandomness, such problem can be solved.

Modern Cryptography: Computational Approach

The content of the third handout starts from here, meaning the previous contents focus on perfect or statistical security, which although being secure against all-mighty adversaries, is utterly inefficient in practice. In particular, notice that the key space of a perfectly-secure encryption scheme has to be at least as large as its message space. This means that if we consider the key and message as binary strings, the key must be at least as long as the message, and that is obviously ineffective.

The modern approach of cryptography (i.e. the computational-complexity based approach) resolves this problem by relaxing the notion of security, in particular, only requiring security against efficient adversaries (since the asymptotic notion is considered in most cases, this means the advantage of the adversary ends in time polynomial in the security parameter). In this way, not only the efficiency can be improved, but also many other interesting and useful constructions can be built. (Recall that in the first course of Prof. Liu’s Modern Cryptographic Algorithm, it is explained that assuming one-way function exists, the entire symmetric cryptography can be constructed, e.g. pseudorandom generator, IND-CPA secure encryption etc.)

The lecture has different focus with the third handout. In particular, the handout proved several facts concerning the indistinguishability encryption test in the KL book, namely, any \(\mathsf{PPT}\) adversary cannot guess with probability better than negligible one bit of the plaintext given the ciphertext in an indistinguishable encryption scheme. Another fact is one very similar to semantic security, except that auxiliary information is not considered. Once again, the Prof. Yu proves in the handout that the indistinguishability definition implies this semantic security-like definition. But the proof of the equivalence between indistinguishability and semantic security was not mentioned in the handout. I think in the lecture all of the above content was gone through in less than five minutes. The focus here was on computational-indistinguishability (the more general definition, on any distributions) and pseudorandom generator (which comes with the first hybrid argument proof).

Computational Indistinguishable Encryptions

Similar to the definition in the previous lecture, we define the indistinguishability experiment for private-key encryption scheme \(\mathcal{P}i = (\mathsf{Gen},\mathsf{Enc},\mathsf{Dec})\) with respect to \(\mathsf{PPT}\) adversary \(A=(A_1,D)\) here.

Figure 4: Symmetric Key Encryption Security Experiment

Note that in this experiment, since \(A\) is not all-mighty, and could use randomness in \(A_1\), state information (e.g. random coin used) is passed from \(A_1\) to \(D\). We call the encryption scheme \(\mathcal{P}i = (\mathsf{Gen},\mathsf{Enc},\mathsf{Dec})\) has indistinguishable encryptions in the presence of an eavesdropper if for all \(\mathsf{PPT}\) adversaries \(A\), there exists a negligible function \(negl(\cdot)\) such that \(\Pr[\mathsf{PrivK}_{A,\mathcal{P}i}^{eav}=1]\le 1/2 + negl(\kappa)\), the probability is over the choice of key \(k\), bit \(b\), randomness used in the encryption, and randomness used in \(A\). An alternative way of defining this is to state that for all \(\mathsf{PPT}\) adversaries \(A\), there exists a negligible function \(negl(\cdot)\) such that \(\Pr[D(1^\kappa,\mathsf{Enc}_k(m_0),state)=1]-\Pr[D(1^\kappa,\mathsf{Enc}_k(m_1),state)=1]\le negl(\kappa)\), where \((m_0,m_1,state)\gets A_1(1^\kappa)\). The proof is similar to the one in the previous lecture. One point to note though, is that in the previous proof, \(m_0\) and \(m_1\) are arbitrary so long as they are not identical. This is because the definition considered all possible adversaries, and therefore it is equivalent to the case when \((m_0,m_1)\gets A_1(1^\kappa)\).

Pseudorandom Generator

The definition of pseudorandom generator is as follows. Let \(l(\cdot)\) be a polynomial and let \(g\) be a deterministic polynomial-time algorithm such that upon any input \(s\in\{0,1\}^n\), the algorithm \(g\) outputs a string of length \(l(n)>n\). We say that \(g\) is a pseudorandom generator (PRG) if for all \(\mathsf{PPT}\) distinguishers \(D\), there exists a negligible function \(negl(\cdot)\) such that

\[ |\Pr[D(g(U_n)=1)-\Pr[D(U_{l(n)})=1]|\leq \mathsf{negl}(n), \]

where the probability is take over the random coins used by \(D\) and \(U_n\) (respectively \(U_{l(n)}\)). The difference between the output and input lengths \(l(n)-n\) is called the stretch factor of \(g\).

An Alternative Definition of PRG’s Security

Prof. Yu then introduced an alternative definition of PRG, namely \((t,\varepsilon)\) n-secure PRG. This is similar to the \((t,\varepsilon)\) -indistinguishable encryption in the handout. The definition states that if for any \(\mathsf{PPT}\) distinguisher \(D\) of running time at most \(t\), the advantage of the distinguisher on \(g(U_n)\) and \(U_{l(n)}\) is at most \(\varepsilon\). Prof. Yu then mentioned that when setting the parameters \(t=n^{\omega(1)}\) and \(\varepsilon=n^{o(1)}\), the two definitions are equivalent. (Right now I am convinced that \((t,\varepsilon)\) -secure implies the previous definition, but remain skeptical whether it is possible to construct an advantage that becomes non-negligible when \(t\) becomes super-polynomial. But this seems not worthy of pursuing compared to the definitions and hybrid argument to be introduced later.)

At this point, Prof. Yu mentioned in the lecture (and also in the handout), that PRG’s security is only guaranteed against efficient adversaries. Consider \(g:\{0,1\}^n\to\{0,1\}^{l(n)}\) as a PRG and an adversary \(D: x\mapsto x\in g(\{0,1\}^n)\), then the advantage of the adversary is

\begin{align*} \Pr[D(g(U_n))=1]-\Pr[D(U_{l(n)})=1] &= 1 - |g(\{0,1\}^n)|/2^{l(n)}\\ &\ge 1-2^{n-l(n)}\\ &\ge 1/2\enspace. \end{align*}

The last inequality holds since the stretch of the PRG is at least \(1\). This means the distinguisher \(D\) has constant advantage, and therefore the PRG \(g\) is definitely not secure in this case.

Replacement Lemma

The \((t,\varepsilon)\) -security of PRG enables a very versatile lemma called replacement lemma. I remember Prof. Yu mentioned this some afternoon in the lab the year before. He wrote this on the window of the lab and I remembered he asked someone to answer that. Anyway, the lemma states that if distribution \(X\) and \(Y\) is \((t,\varepsilon)\) -indistinguishable, and function \(f\) (defined over the union of the two distributions’ sample space) is \(T\) -computable, then the derived distribution \(f(X)\) and \(f(Y)\) is at least \((t-T,\varepsilon)\) -indistinguishable.

The proof is actually rather simple. Suppose that the result does not hold, then by contradiction, there exists a distinguisher \(D\) that runs in time at most \(t-T\), and distinguishes the two distributions with probability larger than \(\varepsilon\), namely \[ |\Pr[D(f(X))=1]-\Pr[D(f(Y))=1]|>\varepsilon. \] This already implies contradiction to the assumption. In order to see this, consider a distinguisher \(D^\prime(\cdot)=D(f(\cdot))\). This distinguisher will run in time at most \(t\), but will distinguish \(X\) and \(Y\) with probability greater than \(\varepsilon\). And therefore the distribution \(f(X)\) and \(f(Y)\) is at least \((t-T,\varepsilon)\) -indistinguishable.

Note that this lemma can be used to explain the replacement property of statistical distance. Prof. Yu explained this in brevity but I think I can elaborate a little here. First consider the equivalent definition of statistical distance as the maximum advantage among all distinguishers (no constraint on computational power here), and that could be roughly translated into \((\infty,\mathsf{SD}(X,Y))\) -indistinguishable. By applying substracting a \(T\) from the infinity limit of the running time, no actual limit is applied to the distinguisher. Now, we have when \(\mathsf{SD}(X,Y)\ge \varepsilon\), \(f(X)\) and \(f(Y)\) are at least \((\infty,\varepsilon)\) -indistinguishable, the advantage of any distinguishers on these two distributions is at most \(\varepsilon\). By the fact that the maximum of advantage is just statistical distance, we get \(\mathsf{SD}(f(X),f(Y)) \le\varepsilon=\mathsf{SD}(X,Y)\).

Stretching the Output Length of PRG: Hybrid Argument

By sequentially composing PRG to itself, a PRG with small stretch can be extended to get arbitrarily long pseudorandom bits. This is presented in the following lemma.

Let

\begin{align*} g:\{0,1\}^{n}&\to\{0,1\}^{n+s(n)}\\ s_i&\mapsto(s_{i+1},r_{i+1})\enspace, \end{align*}

where \(s_i,s_{i+1}\in\{0,1\}^n\), \(r_{i+1}\in\{0,1\}^{s(n)}\) be a \((t(n),\varepsilon(n))\) -secure PRG, and for any \(q(n)\in\mathbb{N}\), define

\begin{align*} g^q:\{0,1\}^n&\to\{0,1\}^{n+q(n)s(n)}\\ s_0&\mapsto(s_{q(n)},r_{q(n)},r_{q(n)-1},\ldots,r_1)\enspace, \end{align*}

where for \(0\le i\le q(n)-1\), iteratively compute \((s_{i+1},r_{i+1}):=g(s_i)\). Then, we have that \(g^{q(n)}\) is a \((t(n)-q(n)\cdot\mathsf{poly}(n), q(n)\cdot\varepsilon(n))\) -secure PRG, where \(\mathsf{poly}(n)\) is the running time of computing function \(g\).

The proof of this lemma demonstrated hybrid argument, which is extensively used in the field of cryptography. And since this is the first time it appears, it is worthwhile to pay more attention to that. I found that by using replacement lemma, some of the details of the proof in the handout can be hidden. The following is my slightly modified proof.

Like in the handout, we define the following distributions (note by placing the old output in the right hand side, the output of the function can be depicted as growing to the left, leaving pseudorandom stretches on the right hand side, and the notion actually aligned the seeds on the left hand side, the blank space on the right side can be filled with anything, so long as they are identically distributed, the adjacent two distributions will only have one iteration’s difference. I think this is the intuition behind constructing the series of distributions, a series of padded mimic snapshots along with the growth of the pseudorandom bits.)

\begin{align*} H_0&\overset{\text{def}}{=}g^q(U_n)\\ H_1&\overset{\text{def}}{=}(g^{q-1}(U_n),U_s)\\ H_2&\overset{\text{def}}{=}(g^{q-2}(U_n),U_{2s})\\ &\vdots\\ H_{q-1}&\overset{\text{def}}{=}(g(U_n),U_{(q-1)s})\\ H_{q}&\overset{\text{def}}{=}U_{n+qs}\enspace.\\ \end{align*}

After this, we can observe that for any \(i\in[q]\), the adjacent distributions \(H_i\) and \(H_{i-1}\) can be derived by applying $gq-i to the distribution in the assumption, namely, \(g(U_n)\) and \(U_{n+s}\), and padding \(U_{(i-1)s}\) to the right hand side of the random variables. By replacement lemma, \(g(U_n)\) and \(U_{n+s}\) is \((t,\varepsilon)\) -indistinguishable, then the resulting distribution is \((t-(q-i)\cdot\mathsf{poly}(n),\varepsilon)\) -indistinguishable, which implies it is \((t-q\cdot\mathsf{poly}(n),\varepsilon)\) -indistinguishable. Then by using the triangle inequality, we have for any probabilistic distinguisher \(D\) with running time no more than \(t-q\cdot\mathsf{poly}(n)\), the advantage of \(D\) distinguishing $H_0 and \(H_q\) is

\begin{align*} |\Pr[D(H_0)=1]-\Pr[D(H_q)=1]| &\leq \sum_{i=1}^q|\Pr[D(H_{i-1})=1]-\Pr[D(H_i)=1]|\\ &\leq q\cdot\varepsilon\enspace, \end{align*}

and that completes the proof.

18-19-2 Lecture 4

In this note, the content of this week’s lecture on provable security by Prof. Yu is summarized, from the handout and my own note taken at the lecture. This week’s lecture focuses on hardcore predicate of one-way function and Goldreich-Levin Theorem.

Lecture Content

In this lecture the main focus is on the theoretical construction of pseudorandom generators. Namely, a lemma concerning hardcore bit of one-way permutation implies pseudorandom generator (which works the other way as well) and Goldreich-Levin theorem were introduced. The proof of Goldreich-Levin theorem uses a concept called list decoding, which is a little mind-blowing for me. The lecture begins by giving hints on the last homework of the second lecture.

Equivalence Between Min-Entropy and Collision Entropy

In the first ten minute of the lecture, Prof. Yu explained to the class how to give answer to the fourth question of the homework in the second handout. The problem is that given a distribution \(X\) with collision-entropy \(\mathbf{H}_2(X)\geq k\), \(\forall\delta: 0< \delta <1\), it is possible to construct another distribution \(Y\) such that \(\mathbf{H}_\infty(Y)\geq k-\log(1/\delta)\), and \(\mathsf{SD}(X,Y)\le \delta\).

The answer to this question is given in the note of lecture 2. I think Prof. Yu’s answer implies that the rest of the set \(S:=\{x|\Pr[X=x]>1/\delta\cdot2^{-k}\}\) can be ignored in the distribution of \(Y\). However, I think this will make the question rather disappointing, since constructing another distribution over the exact sample space of \(X\) seems like a stronger guarantee. This is why I argued about the upper bound of minimum probability in the previous answer. This guarantees that when putting the extra probability to the probability over the set \(S\), there exists an assignment that does not exceed the maximum probability requirement \(\Pr[Y=x]\le 1/\delta\cdot2^{-k}\).

Another point to note is that the result \(\Pr[X\in S]<\delta\) can be treated as the result of Markov’s inequality. This is because we can treat the collision probability as the expectation of random variable \(\Pr[X]\), which is no more than \(2^{-k}\). And ergo the result.

One-Way Functions and Permutations

The definition of one-way function here is the same with that given in Prof. Liu’s lecture. Namely, given a function family \(f:\{0,1\}^{n}\to\{0,1\}^{l(n)}\) is a one-way function ensemble if it is

Easy-to-Compute: \(f\) can be computed by some algorithm in time \(\mathsf{poly}(n)\),

Hard-to-Invert: for every \(\mathsf{PPT} A\), there exists a negligible function \(negl(\cdot)\) such that

\[ \Pr_{X\gets U_n,x^\prime\gets A(1^n,f(X))}[f(x)=f(x^\prime)]\le negl(n). \]

Despite the similarity, Prof. Yu did argued in the handout that the more formal definition, where the domain and ranges are arbitrary sets and explicit sampling algorithms may be needed to sample a random element over the domain.

An Intuitive Interpretation of Implication

The relationship between one-way function exists (denoted by \(\mathsf{OWF}\)) and \(\mathcal{P}\ne\mathcal{NP}\) was also mentioned in the class. \(\mathsf{OWF}\) implies \(\mathcal{P}\ne\mathcal{NP}\), or written in a more standard form, \(\mathsf{OWF}\le\mathcal{P}\ne\mathcal{NP}\). In my intuitive opinion, this can be interpreted as there are two big truth tables with some entries’ value unknown. But from some evidence we can conjecture that they are true. Now the stronger assumption (the one that implies others) has more entries that are conjectured correct, and the ones that need to be conjectured correct is only a subset of the first one. This already explained the first one’s conjecture correctness implies the second one’s correctness. What’s more, if by contradiction, an entry of the second one is wrong (contradicting the assumption), then we can surely say that the first conjecture is not correct (since all the conjectured entries need to be correct to make the assumption correct), which also means that the contradicting entry is more ’lethal’ than the other entries outside the subset in the first assumption. Since finding a more powerful false entry is harder, this explains why intuitively, the implies sign ‘\(\le\)’ can be understood as “the hardness is lesser or equal than”.

One-Way Functions based on Different Assuptions

There are different assumption on which one-way functions can be constructed. Some of them are listed in the handout, they are

- Integer Factorization,

- Subset Sum, and

- Discrete Logarithm.

The first and third one have been extensively studied and tested in practice.

Hard-core Predicates of One-Way Functions

The definition of hard-core predicate is as follows. A polynomial-time computable predicate \(h_c:\{0,1\}^n\to \{0,1\}\) is called a hard-core predicate of a function \(f\) if for every \(\mathsf{PPT}\) algorithm \(A\), there exists a negligible function \(negl(\cdot)\) such that \[ \Pr_{X\gets U_n}[A(1^n,f(X)=h_c(X)]\leq 1/2+negl(n), \] where the probability is take over the choice of \(X\) and the random coins of \(A\).

Note that hard-core predicate exists implies one-wayness. The following theorem states that for one-way permutations, hard-core predicates imply the explicit construction of pseudorandom generators. The theorem is as follows.

If a permutation \(f:\{0,1\}^n\to\{0,1\}^n\) has a hard-core predicate \(h_c:\{0,1\}^n\to\{0,1\}\), then the function \(g(x) = (f(x),h_c(x))\) is a pseudorandom generator with a single bit stretch.

The proof given in the handout concerns deterministic distinguisher of pseudorandomness, and imposes stronger limit to the distinguisher of pseudorandomness (in that proof if the hard-core predicate is \((t(n),\varepsilon(n))\) -hard then the output of \(g(\cdot)\) is \((t(n)/2,\varepsilon(n))\) -indistinguishable from \(U_{n+1}\)). I tried to improve the proof by concerning \(\mathsf{PPT}\) adversaries and remove the loss in running time limit. The proof is as follows.

Suppose by contradiction, there is a \(\mathsf{PPT}\) adversary \(A\) of running time \(t(n)\) that can distinguish pseudorandomness such that

\[ \Pr_{X\gets U_n}[A(f(X),h_c(X))=1]- \Pr_{X_1\gets U_{n}, X_2\gets U_1}[A(X_1,X_2)=1]> \varepsilon(n)\enspace. \]

Observe the latter probability can be written as \[ 1/2\cdot\Pr[A(X_1,h_c(X_1))] + 1/2\cdot\Pr[A(X_1,1\oplus h_c(X_1))], \] which means \[ 1/2\cdot\Pr_{X\gets U_n}[A(f(X),h_c(X))=1]-1/2\cdot\Pr[A(X_1,1\oplus h_c(X_1))]>\varepsilon(n)\enspace. \] Now construct a \(\mathsf{PPT}\) algorithm \(D\) that computes the hardcode bit of input, given the output of the one-way permutation. After \(D\) gets its input \(f(X)\), it samples a random bit \(b\gets U_1\), and then calls \(A\), and get \(b^\prime \gets A(f(X),b)\). If \(b^\prime = 1\), output \(b\); else, output \(1\oplus b\).

The probability that \(D\) succeed is as follows,

\begin{align*} \Pr_{X,b,r}[\text{D wins}]&=\Pr[b=h_c(X)]\cdot \Pr[b^\prime=1|b=h_c(X)]+\Pr[b=1\oplus h_c(X)]\cdot \Pr[b^\prime=0|b=1\oplus h_c(X)]\\ &=1/2\cdot\Pr[A(f(X),h_c(X))=1]+ 1/2\cdot(1-\Pr[A(f(X),1\oplus h_c(X))=1])\\ &>1/2 + \varepsilon(n)\enspace. \end{align*}

And that contradicts the assumption that \(h_c(\cdot)\) is a (\(t(n)\),\(\varepsilon(n)\))-hard-core predicate for one-way permutation \(f\).

Reverse Thinking

Note that the previous theorem essentially states that next bit unpredictability implies pseudorandomness. Actually the result works in the other direction as well. Namely, for a pseudorandom generator \(g:\{0,1\}^n\to\{0,1\}^{l(n)}\), \(\forall i \in [l(n)-1]\), \(\forall \mathsf{PPT} A\), there exists a negligible function \(negl(\cdot)\), such that \[ \Pr_{X\gets U_n}[A(f(X)_{[1:i]})=f(X)_{[i+1]}] \le 1/2 + negl(n). \] This is actually quite trivial (which I failed to realize after it was brought up in class, when Prof. Yu noticed me mumbling, as if I knew how to prove it, but it turned out I could not present the complete answer). Suppose that there exists some position \(i\) and a algorithm $A that satisfies the above probability’s negation, then for the construction of pseudorandom distinguisher \(D\), apply the first \(i\) bits of the input to \(A\) and output \(1\) if the predicted bit equals \(f(X)_{[i+1]}\). The probability of \(D\) outputting \(1\) in the case of real randomness is \(1/2\), and in the pseudorandom case, it is \(\Pr_{X\gets U_n}[A(f(X)_{[1:i]})=f(X)_{[i+1]}]\). It is obvious that the advantage of the adversary is non-negligible.

Universal Construction of Hard-Core Predicates: Goldreich-Levin

Theorem

Digested from the handout, we have “It was conjectured that every one-way function has a hard-core predicate, and this was proven by Goldreich and Levin in STOC 1989.” Prof. Yu mentioned that the creativity of this proof is that it uses Chebyshev’s inequality instead of Chernoff bound, which does not require all components are independent, but only pairwise independent. And that reduces the number of guesses needed in the proof, and therefore increases the success probability as well.

The proof given in the handout is the complete proof, unlike in the KL book, where the proof is given in steps, first a simplified case, then the full proof. Prof. Yu did managed to organize the proof in separate independent modules. There are mainly two techniques in this proof, in my opinion. The first one is how to use list decoding to convert a couple of guesses of the hard-core bit into an inversion of the preimage with high probability (\(1/2\) to be specific). The other one is to use Chebyshev’s inequality instead of Chernoff bound, which could reduce the number of guesses needed, ergo increasing the success probability of the inversion algorithm.

There is one remaining problem to be solved. I think the number of guesses \(l=\lceil\log(1+2n/\varepsilon(n)^2)\rceil\) does not guarantees \(2^{-l}\ge\varepsilon(n)^2/4n\) (although $2-l≥ε(n)^2/8n can be guaranteed).

The proof is as follows. Suppose by contradiction there exists a \(\mathsf{PPT}\) algorithm \(A\) such that \[ \Pr_{x\gets U_n,r\gets U_n}[A(f(x),r)=\mathsf{gl}(x,r)]>1/2+\varepsilon(n), \] where the probability is taken over the choice of \(x, r\) and the internal random coins of \(A\). We first argue there exists a set \(S\) of size \(|S| \ge \varepsilon(n)/2\) such that \(\forall x \in S, \Pr_{r\gets U_n}[A(f(x),r)=\mathsf{gl}(x,r)]>1/2+\varepsilon(n)/2\). This is due to Markov’s inequality (not the normal form, since the direction of inequality is inversed). Note that

\begin{align*} \Pr_{x\gets U_n,r\gets U_n}[A(f(x),r)=\mathsf{gl}(x,r)] &=\sum_{x}\Pr[X=x]\cdot\Pr_{r\gets U_n}[A(f(x),r)=\mathsf{gl}(x,r)]\\ &\le\Pr[X\not\in S]\cdot(1/2+\varepsilon(n)/2)+\Pr[X\in S]\\ &\le 1/2+\varepsilon(n)/2 +\Pr[X\in S]\enspace, \end{align*}

which means \(\Pr[X\in S]\ge \varepsilon(n)/2\). Conditioned on \(X\in S\), we construct the following efficient algorithm that can invert \(f\) given \(f(x):x\in S\) with probability no less than \(\varepsilon(n)^2/16n\) (in the handout this is \(\varepsilon(n)^2/8n\) but I think that might have a tiny problem). This reduces our problem to constructing such an algorithm.

The inversion algorithm \(A^\prime\) works as follows. (Let \(l = \lceil\log(2n/\varepsilon(n)^2+1)\rceil\).)

- Uniformly and independently samples \(s^1,\ldots,s^l\gets U_n\), and \(\sigma^1,\ldots,\sigma^l\gets U_1\), where \(\sigma^i\) is a guess for \(\mathsf{gl}(x,s^i)\).

- For every non-empty subset \(\mathcal{I}\subseteq [l]\), let \(r^{\mathcal{I}}\overset{\text{def}}{=}\mathop{\oplus}_{i\in\mathcal{I}}s^i\), \(\tau^{\mathcal{I}}\overset{\text{def}}{=}\mathop{\oplus}_{i\in\mathcal{I}}\sigma^i\). Each \(\tau^{\mathcal{I}}\) is a guess for \(r^{\mathcal{I}}\), because if all the \(\sigma^i\)’s are correct guesses, the resulting \(\tau^{\mathcal{I}}\)’s are also correct guesses.

- For every \(j \in [n]\), make a guess about the \(j^{\text{th}}\) bit

of input \(x\), denoted by \(x_j\), as follows:

For every non-empty subset \(\mathcal{I}\subseteq [l]\), set \(v_j^{\mathcal{I}}:=\tau^\mathcal{I}\oplus A(f(x),r^{\mathcal{I}}\oplus e_j)\), where

\[ e_j \overset{\text{def}}{=}\underbrace{0\ldots0}_{j-1} 1\underbrace{0\ldots0}_{n-j}. \]

Do a majority voting on candidate values \(\{v_j^{\mathcal{I}}:\emptyset \ne\mathcal{I}\subseteq[l]\}\), and let \(x_j^\prime\) be the majority bit of them.

Now we claim that conditioned on \(x \in S\), the success probability of \(A^\prime\) is over \(\varepsilon(n)^2/16n\). First of all, The probability of \(\sigma^1,\ldots,\sigma^{l}\) all being correct guesses is $2-l which is greater than \(\epsilon(n)^2/8n\) (this is where the divergence comes from). Conditioned on that, we now analyze the probability that voting on bit \(x_j\) succeeds. Recall that we have already proved

\begin{align*} \Pr_{X\gets U_n}[A^\prime(f(X)) = X]&\ge\Pr_{X\gets U_n}[X\in S]\cdot\min_{x\in S}\Pr[A^\prime(x)=x]\\ &\ge \varepsilon(n)/2 \cdot\varepsilon(n)^2/8n \cdot\min_{x\in S} \Pr[x^\prime_1=x_1\land x^\prime_2=x_2\land\ldots\land x^\prime_n=x_n]\\ &=\varepsilon(n)/2 \cdot\varepsilon(n)^2/8n\cdot (1- \min_{x\in S} \Pr[x^\prime_1\ne x_1\lor x^\prime_2\ne x_2 \lor\ldots\lor x^\prime_n\ne x_n])\\ &\ge\varepsilon(n)/2 \cdot\varepsilon(n)^2/8n\cdot (1- \sum_{i\in[n]}\Pr[x^\prime_i\ne x_i])\enspace, \end{align*}

where from the second line the probability is conditioned on all \(\sigma^i\)’s are correct guesses and the last inequality is from union bound and the requirement of \(x\) is from the previous equation. It suffices to prove for any \(x\in S\), \(\Pr[x^\prime_i\ne x_i]\le 1/2n\) for all \(i\in [n]\), and we will prove it below.

Note that in the third step of \(A^\prime\), if the \(j^{\text{th}}\) bit of \(x\) is \(0\), then adding \(e_j\) to \(r^{\mathcal{I}}\) does not change the hard-core bit, and the output \(v_j^{\mathcal{I}}\) will be \(0\). On the other hand, if \(x_j = 1\), then the output will be inverted and \(v_j^{\mathcal{I}}=1\). This means \[ \Pr[v_j^{\mathcal{I}}\text{ is correct}] = \Pr[A(f(x),r^{\mathcal{I}}) = \mathsf{gl}(x,r^\mathcal{I})] \ge1/2+\varepsilon(n)/2. \] Let \(m = 2^{l}-1 > 2n/\varepsilon(n)^2\), which is the number of candidates for the majority voting, and denote \(\mathsf{E}^\mathcal{I}\) be the event that \(v_j^{\mathcal{I}}\) is correct. By the construction of \(r^{\mathcal{I}}\)’s, we have that they are pairwise independent. Now we analyze the probability of the event that less than half of the \(v_j^{\mathcal{I}}\)’s are correct, which is

\begin{align*} \Pr[\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}}<m/2] &= \Pr[\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}}-m\mu<m/2-m\mu]\\ &\le\Pr[\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}}-m\mu<-m\cdot\varepsilon(n)/2]\\ &\le\Pr[|\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}}-m\mu|>m\cdot\varepsilon(n)/2]\\ &\le \frac{Var(\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}})} {(m\cdot\varepsilon(n)/2)^2}. \end{align*}

The last inequality is due to Chebyshev’s inequality. Note that since each two \(\mathsf{E}^{\mathcal{I}}\)’s are pairwise independent, this means

\[ Var(\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}}) = \sum_{\mathcal{I}\in[l]}Var(\mathsf{E}^{\mathcal{I}}) \le m\cdot 1/4. \]

Bringing that to the equation above, we have

\begin{align*} \Pr[\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}}<m/2] &\le \frac{Var(\sum_{\mathcal{I}\in[l]}\mathsf{E}^{\mathcal{I}})}{(m\cdot\varepsilon(n)/2)^2}\\ &\le\frac{m\cdot1/4}{m^2\cdot\varepsilon(n)^2\cdot1/4}\\ &\le\frac{1}{2n/\varepsilon(n)^2\cdot\varepsilon(n)^2}\\ &=1/2n. \end{align*}

And that completes the proof of Goldreich-Levin Theorem.

A Central Theorem in Cryptography

The previous two lemma combined gives us the result one-way permutation implies pseudorandom generators. In fact, a more general statement that one-way function implies pseudorandom generators is also true. This is proved by Håstad et al. in [HILL99]. The proof is much more involved and not suitable for this lecture. Prof. Yu mentioned in class that this construction is more of theoretical interest than of practical value, since the loss in efficiency, although polynomial, is still very large. The simplified cases are that of one-way permutations (which has already been proved), and regular one-way functions (every image has the same number of preimages).

Homework

The handout of this lecture has five homework problems. The first one is to improve the advantage loss in the original proof. The rest of the problems are from KL book.

Improving the Advantage Loss in the Proof of Goldreich-Levin Theorem

What the previous proof proves is essentially assuming some algorithm \(A\) of running time \(t(n)\) can guess the hard-core bit with probability better than \(1/2 + \varepsilon(n)\), then there is another algorithm \(A^\prime\) of running time \(\mathsf{poly}(1/\varepsilon(n),n)\) that can compute \(x\) from \(f(x)\) with probability better than \(\varepsilon(n)^3/16n\) (which is non-negligible if \(\varepsilon(n)\) is). This is a stronger guarantee than the one required in one-wayness experiment, where only \(x^\prime \in f^{-1}(f(x))\) is needed. If we add a simple check after \(A^\prime\) output \(x^\prime\), and re-guess \(x\) with fresh randomness if \(f(x^\prime)\ne f(x)\), we can increase the success probability to \(\varepsilon(n)/4\).

There is a detail that need to be paid attention to in the previous argument. The first probability that \(x\in S\) only considers the very preimage \(x\), while in the modified proof, we need to consider all other preimages. Actually, this is not a problem, since the only information that \(A\) learns is \(f(x)\), and that is the same for all other preimages \(x^\prime \in f^{-1}(f(x))\). Conditioned on \(x\in S\), we can safely conclude that all other preimages are in \(S\).

The other point I think may be a caveat is that by repeating the guessing process, we need to calculate the expected running time of \(A^\prime\), since it’s halting is probabilistic now. This should not be a big problem, since the success probability of one guessing is more one over some polynomial, and the inverse is smaller than that polynomial, on infinitely many \(n\)’s. Despite this, the specific halting strategy should be specified to make sure \(A^\prime\) runs in polynomial time.

Exercise 6.1 from KL Book

For \(f(x,y) = x+y\), output \((x+y,0)\) on the output; for \(f(x)=x^2\), just use interpolation to find \(x\).

Exercise 6.2 from KL Book

Assuming \(f:\{0,1\}^n\to\{0,1\}^{l(n)}\) is a one-way function (family), it follows from one-wayness that for all \(\mathsf{PPT}\) algorithm \(A\), there exists a negligible function \(negl(\cdot)\) such that \[ \Pr_{X\gets U_n,x^\prime\gets A(f(X))}[f(x^\prime)=f(X)] < negl(n). \] Now construct another function \(f^\prime:\{0,1\}^n\to\{0,1\}^{l(n)}\) that behaves exactly like \(f\) except on \(x=0^n\), where it outputs \(0^{l(n)}\). For any \(\mathsf{PPT}\) algorithm \(A\), the success probability of inverting \(f^\prime\) is

\begin{align*} \Pr_{X\gets U_n,x^\prime\gets A(f^\prime(X))}[f^\prime(x^\prime)=f^\prime(X)]\leq2^{-n}+\Pr_{X\gets U_n,x^\prime\gets A(f(X))}[f(x^\prime)=f(X)]\enspace, \end{align*}

which is still negligible. This means \(f^\prime\) is also a one-way function. The KL book maybe tries to tell me one-wayness is a statistical result, changing one term will not essentially change the overall characteristics.

Exercise 6.4 from KL Book

The proof is trivial.

Exercise 6.6 from KL Book

If \(f(\cdot)\) is a one-way function, then \(g(x) = f(f(x))\) is a one-way function. This is because \(f\) is efficiently computable, and therefore it is easy to construct an algorithm to invert \(f\) given an algorithm to invert \(g\). The second part is essentially the same.

Pilot Course

Basic Information

- Lecturers: 郁昱, 刘振

- Canvas website: oc.sjtu.edu.cn/courses/19984

Outline

Testing Signing in (not accounted as score reference)

- Since this is only a testing course, absence will not be penatied.

Testing Zoom website

Begin at exactly 8:55

15 students enrolled

Class Outline

- Classical Cryptography

- Modern Cryptography

- Post-quantum Cryptography

- Concluding Remarks

Natural Proof: inbetween area between security and insecurity.

Correspondence between crypto, complexity and math

| Cryptography | Complexity | Math |

|---|---|---|

| PRG, Stream Cipher | PRG, Derandomization | |

| PRF, Block Ciphers, Authentication | Hardness of learning, Natural proof barrriers | |

| Privacy amplification | Randomness extraction | Expanders, Ramsey Graphs |

| Succinct ZK arguments | PCP, Locally testable Codes | |

| Leakage-resilient crypto | Dense model theorems, hardcore sets | primes in arithmetic progression |

| Obfuscation | Hard search problems | |

| private information retrieval | locally decodable codes | extremal set theory |

| HE, homomorphic secret sharing | locally random reductions, program checking |

Lecture 1

Introduction

Not all slides will be uploaded to canvas prior to the lecture.

Provable security is an important aspect of modern cryptography.

Prerequisites:

- read formal proofs

- analyze complexity of algorithms

- familiarity with basic probability theory

Textbooks:

- KL book

- Foundations of cryptography

- research papers

What is Cryptography

Cryptography is a part of modern theoretical computer science. Pseudorandomness, communication complexity, and ZK are also useful tools in TCS in general.

Godel prize topics:

- Natural proof – problems that are hard to prove or disprove, natural proof proves that the proof itself is hard.

Cryptography is not all like mathematics. In crypto, people are only concerned with moderate problems, and efficient algorithms.

More on that table:

| Cryptography | Complexity | Math |

|---|---|---|

| PRG, Stream Cipher | PRG, Derandomization | |

| PRF, Block Ciphers, Authentication | Hardness of learning, Natural proof barrriers | |

| Privacy amplification | Randomness extraction | Expanders, Ramsey Graphs |

| Succinct ZK arguments | PCP, Locally testable Codes | |

| Leakage-resilient crypto | Dense model theorems, hardcore sets | primes in arithmetic progression |

| Obfuscation | Hard search problems | |

| private information retrieval | locally decodable codes | extremal set theory |

| HE, homomorphic secret sharing | locally random reductions, program checking |

Classic Cryptography

Traditionally the definition is about secure communication, while the modern verison is more versatile.

Basic primitives:

- one-way function: for randomly chosen pre-image, it is hard to find any pre-image correponding to the image.

- pseudorandom generator: randomness amplification

- pseudorandom function: PRG, but even better

Some basic tasks:

- encryption: transformation of message that protects message against evasdroppers

- MAC/Signature: protect message integrity the previous is the symmetric case while the latter one is public-key case

- Secure computation: protect input privacy while preserving functionality

Applications:

- Secure communication

- authetication

- digital signature (MAC, but better)

- privacy-preserving computation: MPC, HE, Obfuscation, etc.

Obfuscation originates from software code protection, crypto community wants to formalize it and enhance upon it, but so far no satisfying results.

- Combination of previous tools

Symmetric Key Encryption (SKE)

A triplet of algorithms

- Key Generation: \(k \gets KeyGen(1^\kappa)\)

- Encryption: \(c \gets Enc(m, k)\)

- Decryption: \(m^\prime \gets Dec(c, k)\)

Symmetric in the sense that the two parties share common secret knowledge beforehand.

Properties:

- Correctness: BPP or P

- Security: passive or active, computaitonally bounded or unbounded (not like oracles)

Examples in classical cryptography:

Caesar Cipher, Enigma

Shannon’s OTP

Just operations on the GF2 field. Perfectly secure in the sense that ciphertext is independent of message. In other words, the mutual information between ct and message is zero.

Perfect secrecy’s limitation: \(|K| = |M|\)

Shannon’s Entropy

\(H = \sum_i p_i \cdot \log(1/p_i)\) \(I(X;Y) = H(X) - H(X|Y)\)

Adversarial Model

- Cihpertext-only attack

- known-plaintext attack

- chosen-plaintext attack

- chosen-ciphertext attack

How to argue security

Using reduction based proofs. If problem A is computationally hard, then crytpo system B is secure against PPT adversaries. In practice, this is done through showing that algorithm for B can be efficiently converted to algorithm for A.

Public Key Cryptogrpahy

Also a triplet of algorithms

- Keygen

- Encryption

- Decryption

Definition of security: CPA game.

Modern Cryptography

Modern crypto bases security upon computationally hard problems, which is what PKE do. But SKE normally does not have this requirement (e.g. AES, DES).

The dawn of modern cryptogrphy: DH, RSA.

Decisional DH assumption: \((g^x, g^y, g^{xy}) \sim (g^x, g^y, g^z)\)

Pseudorandomness to break shannon’s barrier: BMY generator (hybrid argument)

The hierarchy of cryptography

- Algorithmica: \(\mathcal{P}=\mathcal{NP}\)

- Heuristica: \(\mathcal{P}\neq \mathcal{NP}\)

- Pessiland: no OWF

- Minicrypt: exists OWF

- Cryptomania: exists PKC and MPC

One-way functions: the minimal assumption for cryptography. Notice this is in the average case sense. HILL theorem states that OWF implies PRG.

Not all cryptographic primitive has secret

CRHF: hard to find collision for a random instance of hash function. \[\Pr_{h\gets H}[A(h)=(x,x^\prime): x\neq x^\prime, h(x) = h(x^\prime)]\]

Practical instantiations of CRHF: MD5, SHA1, SHA256, SHA3

But what about a family of algorithms? This is due to definitional problems.

Two party computation

Garbled circuit in [Yao82b]. For practical application, c.f. Netherland sugar beat auction case.

The Quantum CRYSIS

- Shor’s algorithm: solves DL and Factoring in poly-time

- Grover’s algorithm: general quadratic speed-up

It is believed that \(BQP \ne NP\).

NIST PQC candidate announcement.

``GOOD’’ assumptions include:

- Lattice-based: e.g. LWE

- Code-based: e.g. LPN

- Hash-based: limited to digital sinature

- Multivariate: no provable security?

LWE and LPN problem, solving noisy equations over finite-field, similar to solving equations over finite-field (plain elimination) or solving noisy equations over infinite-field (projection), but actually different.

Decoding Random Linear q-ary codes. Message is \(s\), generation matrix is \(A\), noisy codeword is \(A \cdot s + e\). This problem is NPC in the worst case, also hard in the average case (the famous reduction).

LPN: sub-exponential algorithm exists but that’s all for the status quo. BKW: time complexity is \(2^{n/\log n}\) for constant noise rate.

LPN-based PKE (one-bit)

Alice (the receiver): \(a\gets \{0,1\}^{n\times n}\), \(sk = s^T\), \(e^T \gets B_\mu^n\) \(pk = (a, s^T \cdot a + e^T)\)

Bob (the sender): \(s_1,e_1\gets B_\mu^n\) \(c_1 = a\cdot s_1 + e_1\), \(c_2 = b^T \cdot s_1 + m\)

Security is easy to prove since all the messages are LPN instances or hard core bits.

Piling-up lemma: for \(x,y\gets B_\mu^{2n}\): \(\Pr[x^T\cdot y = 0]\ge 1/2 + 2^{O(-n\mu^2)}\).

Lecture 2

Recaping Last Lecture

CRHF

Hard to find collision, and it can be based on several mathematical assumptions. Another caveat is that in this definition, we consider a family of functions \(\mathcal{H}\), while in practice we only have only one algorithm, e.g. SHA256, MD5.

Note that in non-uniform model the collision can be modelled as auxiliary input, and thus the one-algorithm definition can be trivially broken.

Multiparty Computation

Nothing is leaked beyond the input and output information.

Decoding Random Linear Code

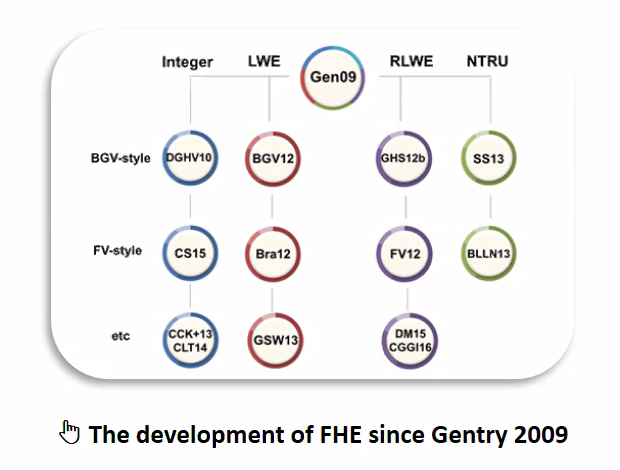

Lattice / LPN based assumptions are these types of assumption. The latest achievement based on these assumptions are Gentry in STOC 2009.

Introducing the homomorphic evaluation function \(\mathsf{Eval}(pk, f, c_1,\ldots,c_t)\)

Important Limitation: Complexity of decrypting \(c^*\) must not depend on the complexity of \(f\). Otherwise, one trivial solution is to keep all the ciphertext inputs and the description of function f, then in decryption, just decrypt and then evaluate. This goes against the goal of computation outsourcing.

Cryptography is full of conjecture, surprisingly equivalence, and impossibility results.

A good / famous example is Alice’s Jewelry Store. Worker (server) works (evaluates) on raw materials (inputs) to produce jewelry (output).

This is like MPC, but only one round of communication, very efficient in communication.

Pre-2009 schemes are so called somewhat homomorphic, not fully.

Preliminaries of Provable Security

Writing a formal proof

- Conditionally

- by a reduction

- Unconditionally

- constructively or existentially

Most crypto proofs are conditional, the minimal assumption is the existence of one-way function. And the existence of OWF considers average-case hardness (most instances are hard), while \(\mathcal{P} \ne \mathcal{NP}\) considers worst case hardness.

Though seemingly groundless, conjectures like RSA seems robust enough. A French team successfully conducts cryptanalysis on ~ 800bits RSA.

Unconditionally proof usually involves information theory. Constructive results are surely more satisfying than existential solution (which only provides one-bit).

- Disproof

- provide one counterexample is sufficient

- Proof hard to prove

- shows that the proof itself is hard to come up with.

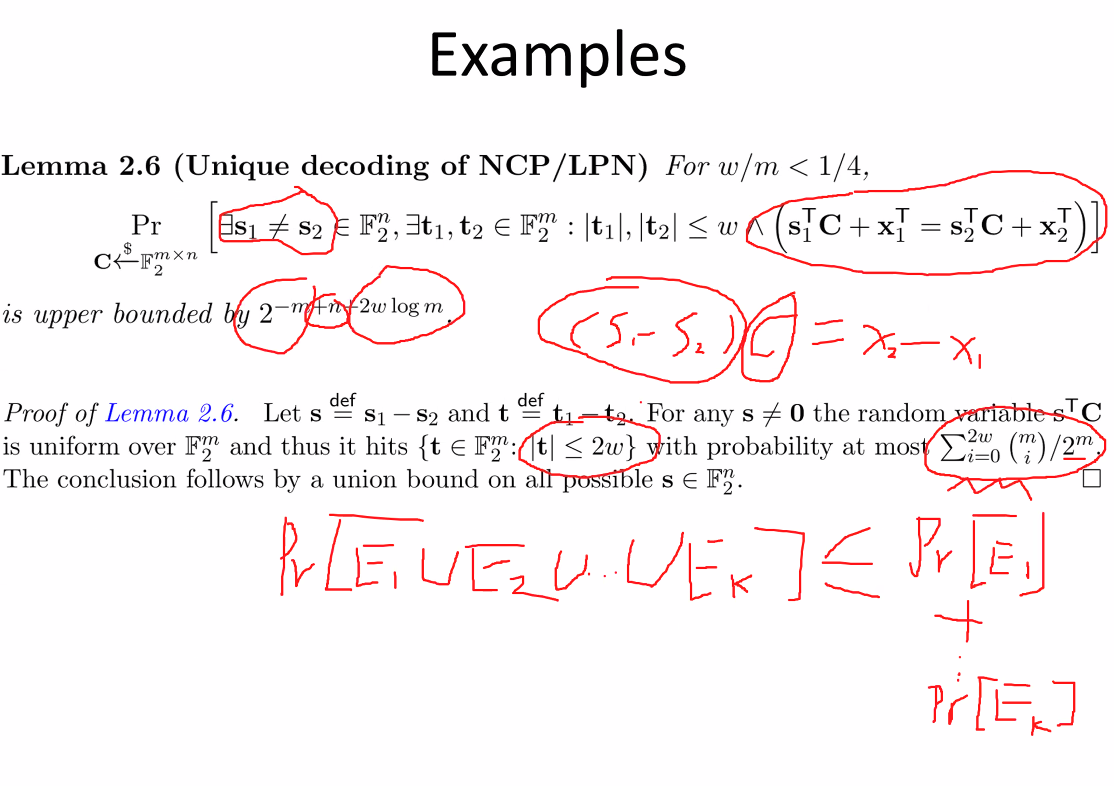

Examples

- Shows the number of primes are infinite Use simple proof technique

- For sequentially ordered primes \(p_1,\ldots,p_n\), is \(p_1\cdot p_2 \cdot \ldots \cdot p_n \pm 1\) prime as well? No, the prime list is not complete. Related problems are twin prime conjecture.

- Binary linear code. A binary (m,n)-code has dimension n and length m. The generator matrix is of size n by m.

Nearest Codeword Problem (NCP): given the generator matrix C and noisy codeword \(t^T = s^T \cdot C + x^T\). Noise x is limited by its hamming weight which is exactly \(d\). The solution is \(s^\prime\) such that

\[ s^T \cdot C + x^T = s^{\prime T} \cdot C + x^{\prime T} \]

Show most linear codes have unique decoding when codeword length \(m\) is large enough.

Figure 5: Proof of Unique Decoding of LPN

This is an example of existential probablistic proof.

Notations on rv’s and sets

Sets \(\{0,1\}\) is the most common one.

Notations of probability distribution

- non-negativity

- maps from sample space to real number in [0,1]

- add up to one

Random variables

very similar to distribution

Events and Independence

Event is actually a subset of sample space, independent events are two “regular” subspace. By regular I mean conditioning on one event the other one has the same probability.

Independent variables: for every possible value, the corresponding events are independent.

Polynomial and friends

Efficient algorithm means polynomial-time computable. In cryptogrpahy, we want the problem for adversary is super-polynomial hard while the success probability is some inverse of super-polynomial function.

A function \(f\) is superpolynomial if for every constant \(c>0\) and all sufficiently large \(n\) we have \(f(n) > n^c\).

Negligible function is the inverse of some superpolynomial. E.g. \(2^{n/2}\), \(n^{\log n}\)

Overwhelming and non-negligible

- overwhelming

- 1 - negligible

- non-negligible

- not negligible (larger than some polynomial for infinitely-many n’s)

- noticible

- larger than some inverse of polynomial

Example of non-negligible but non-noticible function: “punch-out” function at all even / odd points.

Asymptotic functions

big-oh notations.

| Asymptotic | Meaning |

|---|---|

| \(o\) | \(\leq\) |

| \(\omega\) | \(\geq\) |

| \(\theta\) | \(=\) |

| \(o\) | \(<\) |

| \(\omega\) | \(>\) |

Function Ensembles

Different functions for different problem scale.

Example: one-way function

Usual mathematical objects are functions of the security parameter.

Union bound and Markov inequality

Union Bound:

Markov Bound: \(\Pr[X \ge \delta \mathbb{E}[X] ]\le 1/\delta\)

Chebyshev’s Inequality

\[ \Pr[|X-\mu|\ge \delta\sigma] \le 1/{\delta^2} \]

Chernoff bound and Hoeffding Bound

There are additive form and multiplicative form

Piling up Lemma and misc

We have \(\Pr[\bar{x} = 1] = 1/2 - 1/2 \cdot (1 - 2\mu)^n\)

Extreme cases: \(\mu = 0\) nothing changes; one \(\mu = 1/2\) then the result is random.

- Fact 1.

- For any \(0\le x \le 1\) it holds that \(\log_2 (1+x) \ge x\). For any \(x > -1\) we have \(\log (1+x) \le x / \ln 2\)

- Fact 2.

- Binominal approximation

ANOTHER HOMEWORK on the existence of independent code

Lecture 3

How to do last lecture’s homework

If C is a random matrix, then Cr is uniformly random. The answer will not be distributed today, though.

Statistical distance / indistinguishability and the LHL

First define the indistinguishability experiment.

There are three levels of security:

- Unconditionally secure / information-theoretically secure

- advantage has zero advantage

- Statistically secure

- adversary has negligible advantage

- Computationally secure

- pseudorandomness, etc.

The experiment here is about private key encryption

Figure 6: Private Key Encryption Experiment

The adversary’s advantage is \(\mathsf{Adv}{eav}{\mathcal{A},\mathcal{P}i} = \Pr[b^\prime = b] -1/2\) Notice here the adversary is considered to be all-powerful, and so it can determine which random coin is the most advantageous, and so it doesnot has to be a randomized algorithm, or to keep state information.

The above algorithm can also be transformed into an indistinguishability game, such that the same \(\varepsilon\) is still the bound. We can use conditional probability to prove this equivalence.

One-time pad is perfectly secure

OTP is defined over \(\{0,1\}^n\), we want to prove that this is perfect-secure. And it is so simple, since any two ciphertexts are distributed identically, so any adversary’s advantage is zero.

Statistical Distance

For two distributions X,Y, the statistical distance is defined as:

\[\mathsf{SD}(X,Y):=1/2 \sum_x \left|\Pr[X=x]-\Pr[Y=x]\right|\]

And we can define conditional statistical distance \(\mathsf{SD}(X,Y|Z):= \mathsf{SD}((X,Z),(Y,Z))\).

The 1/2 in the definition is to normalize the output to the range of [0,1].

SD provides a upper bound of the advantage of distinguishing two distributions. This can be seen as \(D\) defines a distribution on the support. The best we can do is to let it maximize the success probability (in the distinguishing game) on every input.

SD is a metric since it has:

- non-negativity

- identity of indiscernibles

- symmetry

- \(\mathsf{SD}(X,Z) \leq \mathsf{SD}(X,Y) + \mathsf{SD}(Y,Z)\) Furthermore, it has the following property:

- the equality holds when supports of \(X,Y\) are disjoint.

- for every function \(f\), \(\mathsf{SD}(f(X),f(Y))\leq\mathsf{SD}(X,Y)\)

Statistical Security for OTP

An application of replacement lemma can prove the indistinguishability when replacing the key distribution from uniformly random to statistically close to uniformly random. This can be understood intuitively by that the two ciphertexts are both \(\varepsilon\) close to the uniform distribution, and they are at most \(2\varepsilon\) far away.

Entropy

Renyi entropy of order \(\alpha \ge 0\) and \(\alpha \ne 1\) is defined as: \[H_{\alpha}(X):=\frac{1}{1-\alpha}\log\left(\sum_{i}p_i^\alpha\right)\]

- \(\alpha = 0\)

- we have max-entropy;

- \(\alpha = 2\)

- we have collision entropy, i.e. \(-\log(\sum_i p_i^2)\);

- \(\alpha \to 1\)

- it converges to Shannon entropy;

- \(\alpha \to \infty\)

- we have min-entropy, minus log of the maximum probability.

A classical example: a key that has 1/2 probability to take all zeros, and the other cases uniform over all other possibilities. It has about n/2 Shannon entropy, but still a very bad key source. We should instead focus on min-entropy.

Two lemmas about indistinguishability

Pilling-up lemma